If you want to determine how effective/valuable each URL is on your website in terms of SEO authority, quality of content and conversion opportunity, this guide can help you gather the data you need, which can act as a nice supporting document for an overall content audit document.

In this guide I specifically look at how to map Analytics (or Ahrefs, Social Share etc) data with a list of crawled URLs.

Before you start please bare in mind:

- I use Deep Crawl in this example. Other apps like Screaming Frog will provide similar data

- DeepCrawl does export 30 days’ of organic visitor data in a Universal Crawl, however if you require more sample data from different channels/segments its worth going through the process in this guide

- You could do the opposite of this process and run Analytics landing page data through a crawl tool (DeepCrawl have a great post on this ) however, I want to see how every pages on the site does, including the ones that have zero visits, so I opted for the process in this guide

So part of this audit involves me gathering some of the following data metrics for each existing URL:

- Organic landing page visits (Analytics)

- Total organic visits (Analytics)

- Total traffic visits (Analytics)

- Total traffic conversions (Analytics)

- Total organic conversions (Analytics)

- Word counts (DeepCrawl)

- Page authority (DeepCrawl/Ahrefs)

- Optimisation good? (Top level manual audit)

- Design good/bad? (Top level manual audit)

- A few more metrics…!

Gathering the data

Crawl Data

The data from DeepCrawl is easy to get (run a Universal crawl, then go to “Reports” – “Indexation” – “All URLs” and download CSV. Screaming Frog and other local/server running apps will also provide most of this data, however I tend to use DeepCrawl because it runs on a server, offers a lot more data and is way more affordable than other similar platforms.

Analytics

So I need my Analytics data next which is pretty straight forward. I use a sample of 12 months and download all of the traffic source and conversion CSVs.

At this point you might be saying “why don’t you just export the analytics data and run that through a List Crawl in Screaming Frog/Deep Crawl!?” – Well I need to see if some of the existing pages on the site have zero visits, and some pages will not have organic visits vs direct/campaign visits, so its better to lead with the list of crawled pages.

Match Analytics data to the crawl data

Here are the steps I took to achieve this:

Step 1:

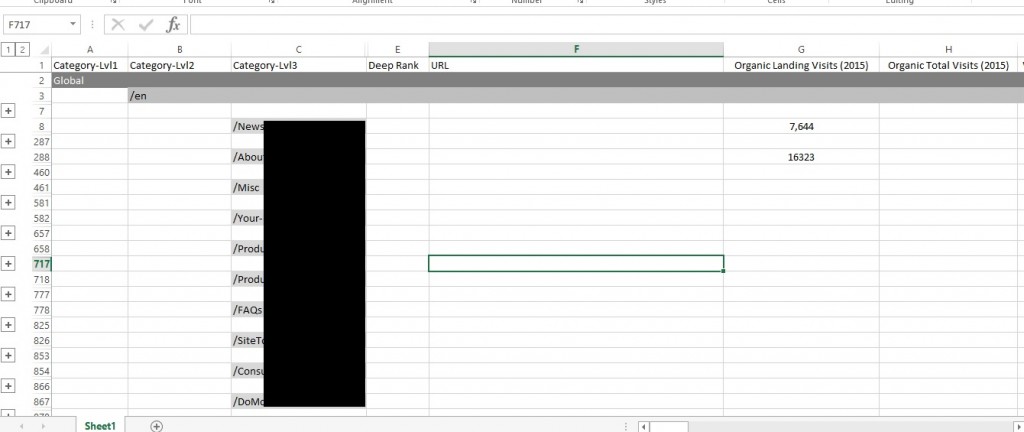

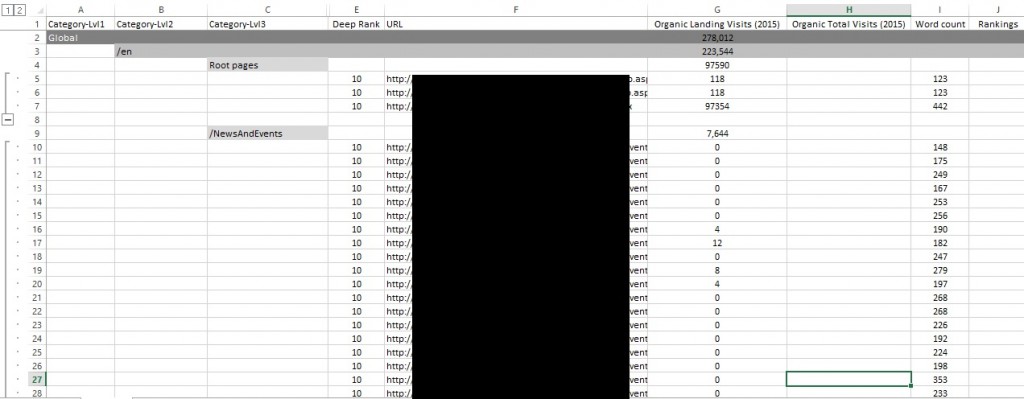

I created a master Excel sheet listing my crawl data, segmenting each category and sub-category of the site by using headings and the “Grouping” function to make it easier to work with and presentable.

Step 2:

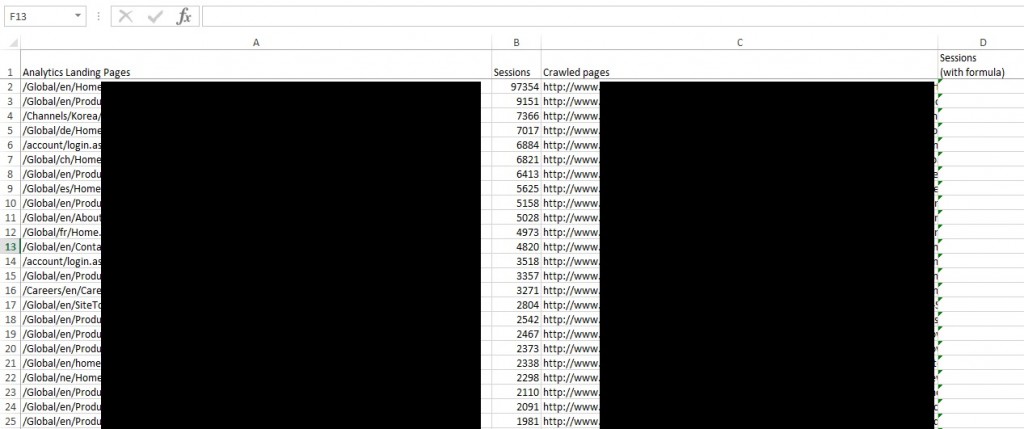

Create a separate excel sheet to list, side by side:

- The URLs found in analytics and their visitors numbers

- The list of crawled URLs

Step 3:

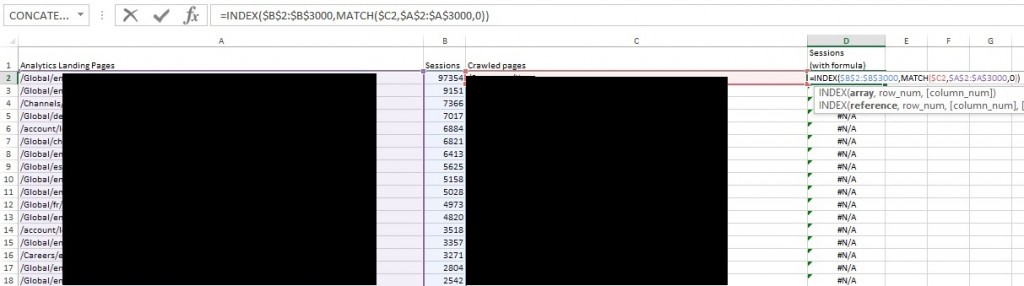

Create the Excel function I need in the cells under the “D” column in the example above, to grab the session data for each URL from the Analytics list.

For this I used the Excel “INDEX” and “Match” functions. This combined function will look for the crawled URL within the GA URL list and return the session data next to each crawl URL.

Here’s the formula I used with example below of how each cell is referenced:

=INDEX($B$2:$B$3000,MATCH($C2,$A$2:$A$3000,0))

I used this great guide to figure out this Excel formula, I recommend referring to it: https://www.ablebits.com/office-addins-blog/2015/08/26/excel-compare-two-columns-matches-differences/#compare-colums-row-by-row

Step 4:

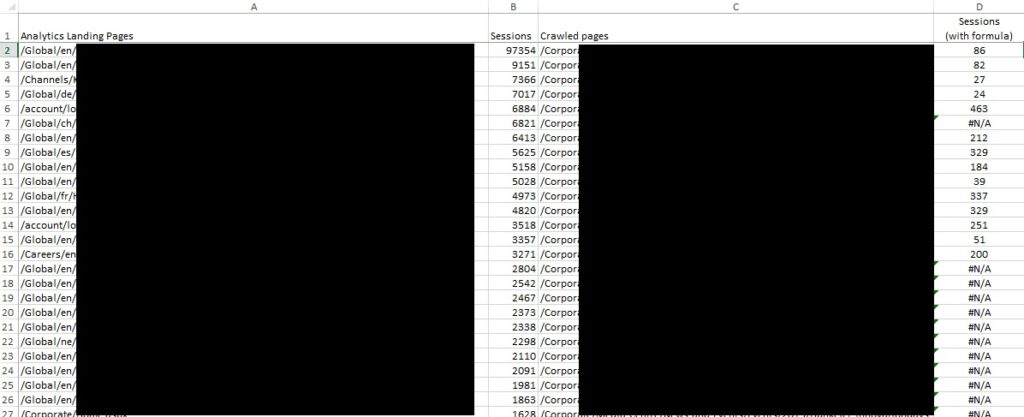

You will notice that the URLs from Analytics strip out the domain, so I did the same for the Crawl list with a find and replace in excel in order for both sets of data to match.

Once I did this, I started to see the matching session data, some URLs do not have values and so you get “#N/A” – once I past this data into my master sheet I will do a Find and Replace in excel to replace #N/A with “0” – to make it easier for me to add up all values afterwards.

Step 5:

Paste the new visitor figures into the master Excel sheet, replace “#N/A” values with “0” and do a =SUM function to add up all visitor data for each section as a nice summary above each.

Result

I have a master Excel sheet that lists individually all visitor data from analytics for each currently existing URL on the site, with summary figures above each segmented category.

Now I need to add more analytics and Ahref’s data and highlight good/bad results quickly using some conditional formatting – so this is where I leave you, hope this helped!