The Technical SEO Audit Essentials Guide

Many people don’t realise that the basics of technical SEO are not as complex as they may think and that, in most cases, you may not need a developer. Whilst some people believe ‘technical SEO is just makeup’, an attempt to make your site look pretty for Google, it is integral to your SEO strategy and far more beneficial than that.

Why is technical SEO important? Technical SEO covers everything that helps search engines such as Google and Bing crawl and index your website effectively, from page titles and meta data to crawl errors and canonical tags. If Google cannot crawl your site properly, you aren’t going to get as much organic traffic as you should!

In this technical SEO audit essentials guide, we’ll take you through the basics of what you need to know and do to improve your website from a technical perspective, and why you should fix these issues as soon as possible. There are many aspects to a technical SEO audit, but by creating a checklist and implementing a strategy, you’ll be well on the way to a structurally sound website and in a much better position to rank well.

If you only need information on a specific issue, use this table of contents to jump straight to the relevant section.

- 1.1 Preferred Domain

- 1.2 Structured Data

- 1.3 Mobile Usability

- 1.4 Index Status

- 1.5 Crawl Errors

- 1.6 Robots.txt

- 1.7 Sitemaps

- 1.8 URL Parameters

- 1.9 PageSpeed

- 2.1 Page Titles

- 2.2 Meta Description

- 2.3 Canonical Tags

- 2.4 Pagination Tags

- 2.5 Duplicate Pages

- 2.6 Images and Rich Media

- 2.7 302 Redirects

- 2.8 URL Structure

- 3.1 Site Crawl Tools

- 3.2 Google Tools

- 3.3 External Duplication Tools

- 3.4 Other Tools

1. Search Console

If you haven’t already set it up, setting up Google Search Console should be the first thing you do after reading this. Your Search Console account will give you a wealth of data on how Google is currently indexing and displaying your website, and it will flag any immediate issues Google comes across. You need to set up a Search Console property for every variation of your website: with and without the www. in the URL (e.g. www.example.com and example.com) and, if your site is available over both, the http:// and https:// versions too.

1.1 Setting your preferred domain

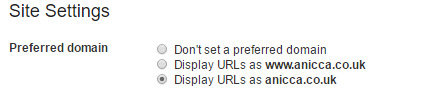

Once Search Console is set up and you have a property for each variant of your domain name, the first thing to do is set your preferred domain. This should be the version of the URL your homepage uses by default.

Why should you fix this?

You need to set this so that Google displays the right version of your URL. If your website uses www.example.co.uk throughout, you don’t want Google to treat example.co.uk as your primary domain, as this will cause indexation issues and waste crawl budget.
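To check that only your preferred domain is being served, you can confirm that every other variant permanently redirects to it. The sketch below is a minimal illustration, assuming you have already recorded each variant’s status code and `Location` header (for example, from a crawler export); the helper names and the `example.co.uk` responses are hypothetical.

```python
from typing import Optional

def domain_variants(host: str) -> list[str]:
    """Return the four http/https, www/non-www homepage variants of a host."""
    bare = host.removeprefix("www.")
    return [f"{scheme}://{prefix}{bare}/"
            for scheme in ("http", "https")
            for prefix in ("", "www.")]

def check_preferred(responses: dict[str, tuple[int, Optional[str]]],
                    preferred: str) -> list[str]:
    """Flag any variant that is neither the preferred URL (served with 200)
    nor a permanent 301 redirect straight to it."""
    problems = []
    for url, (status, location) in responses.items():
        if url == preferred:
            if status != 200:
                problems.append(f"{url}: preferred URL returned {status}")
        elif status != 301 or location != preferred:
            problems.append(f"{url}: expected 301 to {preferred}, got {status} to {location}")
    return problems

# Hypothetical status codes and Location headers recorded for each variant
preferred = "https://www.example.co.uk/"
responses = {
    "http://example.co.uk/": (301, preferred),
    "http://www.example.co.uk/": (301, preferred),
    "https://example.co.uk/": (302, preferred),  # temporary redirect, so flagged
    "https://www.example.co.uk/": (200, None),
}
for problem in check_preferred(responses, preferred):
    print(problem)
```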

1.2 Structured Data

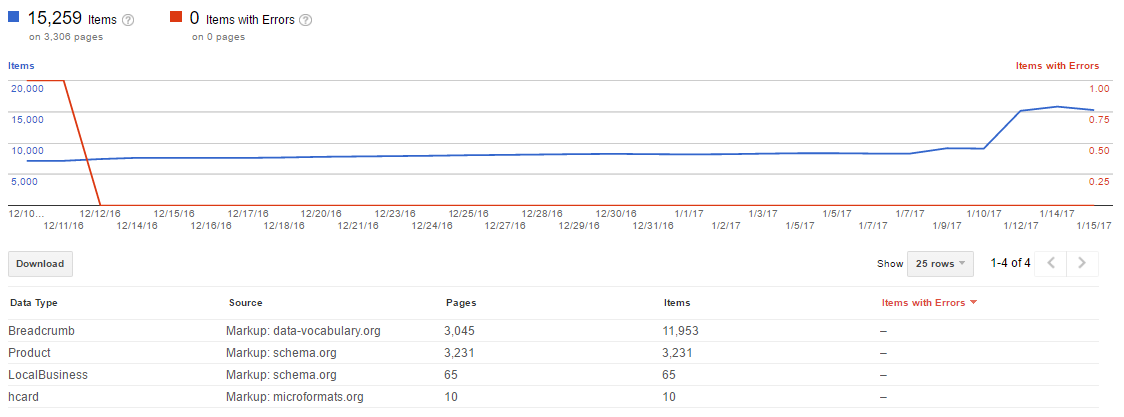

If you’re using structured data on your website, Search Console will detect it, along with any errors in the current markup. There are plenty of structured data types you could use on your website; take a look at our schema blog post to learn more.

Why should you fix this?

If you have items with errors, it’s important to get your developer to look at them. Errors may limit the effectiveness of your structured data and mean Google can’t read or display the markup, which can reduce your click-through rate in the search engine results pages (SERPs).
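Structured data is commonly added to a page as a JSON-LD script. As a minimal sketch, the Python below builds schema.org `Organization` markup and runs a basic sanity check before the code goes to your developer. The business details are hypothetical, and this check only catches malformed JSON and missing `@context`/`@type` keys; it is no substitute for Google’s own testing tools.

```python
import json

def organization_jsonld(name: str, url: str, logo: str) -> str:
    """Build a schema.org Organization object serialised as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
    }
    return json.dumps(data, indent=2)

def basic_errors(markup: str) -> list[str]:
    """Very basic checks only: the markup parses as JSON and has @context and @type."""
    try:
        data = json.loads(markup)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    return [f"missing {key}" for key in ("@context", "@type") if key not in data]

# Hypothetical business details
markup = organization_jsonld("Example Ltd", "https://www.example.co.uk/",
                             "https://www.example.co.uk/logo.png")
print(f'<script type="application/ld+json">\n{markup}\n</script>')
print(basic_errors(markup))                        # -> []
print(basic_errors('{"@type": "Organization"}'))   # -> ['missing @context']
```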

1.3 Mobile Usability

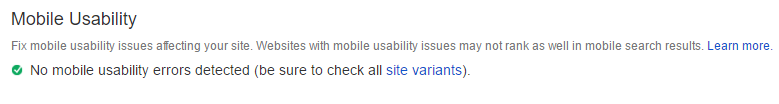

By now, your website should be mobile friendly. As most searches now take place on a mobile device, having a mobile-friendly website is more important than ever; Google is even switching to using mobile sites as its primary index. To see why this matters so much, take a look at Google’s mobile-first indexing blog post.

Why should you fix this?

You may come across a range of mobile usability issues. Again, these should be looked at by your developer because, as the blog post mentioned above explains, Google is going to index websites primarily based on their mobile versions. The mobile index will be the main, most up-to-date index, with the desktop index updated less often.
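A missing or incorrectly configured viewport meta tag is one of the mobile usability issues Search Console reports. The sketch below, which assumes you have the page’s HTML as a string, uses Python’s standard `html.parser` to check for a `viewport` tag set to `width=device-width`; the class and function names are hypothetical, and it covers only this one issue.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Record the content of any <meta name="viewport"> tag."""
    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""

def viewport_problem(html: str):
    """Return a short description of a viewport problem, or None if it looks fine."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return "viewport not set"
    if "width=device-width" not in finder.viewport.replace(" ", "").lower():
        return "viewport not set to device-width"
    return None

good = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(viewport_problem(good))                               # -> None
print(viewport_problem("<head><title>Shop</title></head>"))  # -> viewport not set
```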

1.4 Index Status

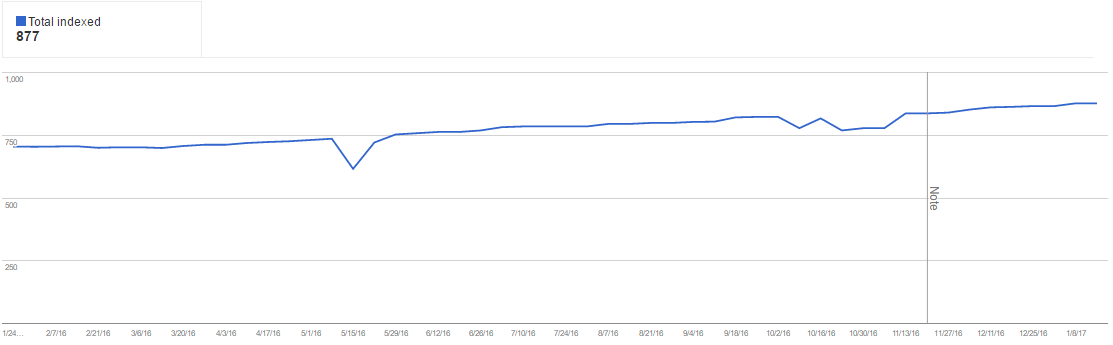

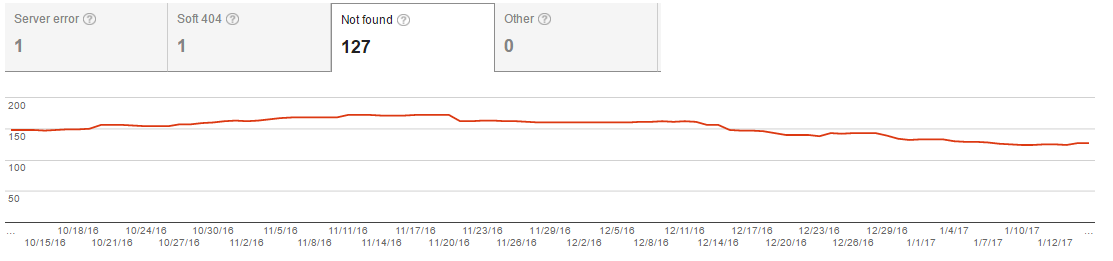

The next thing to check is your index status, which shows how many pages Google currently has indexed. On this line graph, you should be aiming for a steady, consistent line, as this shows Google is not having trouble indexing the website. It’s also useful if you are adding new pages, content or products to your site, as you should see an increase on the graph as Google indexes them. If you find anomalies such as sudden peaks and troughs, Google may be having trouble indexing your website. The reasons vary: duplicate pages, incorrect canonical tags and issues with your robots.txt file are common causes. If you do see anomalies, compare their dates with key algorithm updates to determine whether your website has been affected. If you’re migrating your website, you should also use this graph to monitor the crossover and make sure no pages are lost.

Why should you fix this?

As mentioned, the index status graph is a great indicator of your website’s health. Peaks and troughs in your data show that Google is struggling to index your website consistently, which is likely to limit your performance in the SERPs significantly. The graph above is a good example: there is a steady increase in pages as content is created and uploaded to the website.
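If you export your indexed-page counts, a simple check can highlight the peaks and troughs described above. This is a minimal sketch that flags any reading which changes by more than a chosen fraction from the previous one; the 20% threshold and the weekly figures are hypothetical, so tune them to your site’s normal growth.

```python
def flag_anomalies(counts: list[int], threshold: float = 0.2) -> list[int]:
    """Return the positions where the indexed-page count changes by more than
    `threshold` (as a fraction) compared with the previous reading."""
    flagged = []
    for i in range(1, len(counts)):
        previous, current = counts[i - 1], counts[i]
        # Skip a zero baseline to avoid dividing by zero
        if previous and abs(current - previous) / previous > threshold:
            flagged.append(i)
    return flagged

weekly_indexed = [1200, 1215, 1230, 1250, 640, 1260, 1275]  # hypothetical data
print(flag_anomalies(weekly_indexed))  # -> [4, 5]: the trough and the recovery
```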

1.5 Crawl Errors

Crawl errors in Search Console consist of server errors (5xx errors, such as 500) and client errors such as 404 (page not found) and 403 (forbidden). They show where, for one reason or another, Google could not reach the destination page. For persistent server errors, contact your hosting company and check the server logs to make sure the site is being served and working properly. You should address 404 errors yourself where possible, usually by redirecting broken pages to other relevant pages.

Why should you fix this?

404 errors relate to pages that are linked to but no longer exist, so you may be losing valuable authority and crawl efficiency. Where a relevant alternative exists, 404 pages should be 301 redirected to it. It is a common mistake to redirect all broken pages to the homepage, but this offers no value to the user or to Google; as best practice, redirect each page to the closest topical page. It’s not always possible to resolve every 404 error, and almost every domain will have some, so download the list of errors and prioritise them based on where in your website they occur.
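To prioritise a downloaded list of 404 errors by where they occur, you can group the URLs by their first folder. The sketch below assumes the export has been reduced to a plain list of URLs; the URLs and the function name are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit

def errors_by_section(urls: list[str]) -> list[tuple[str, int]]:
    """Count 404 URLs by their first path segment, most affected sections first."""
    sections = Counter()
    for url in urls:
        first = urlsplit(url).path.strip("/").split("/")[0]
        sections["/" + first + "/" if first else "/"] += 1
    return sections.most_common()

not_found = [  # hypothetical export from Search Console
    "https://www.example.co.uk/clothing/old-jeans",
    "https://www.example.co.uk/clothing/summer-2016",
    "https://www.example.co.uk/blog/deleted-post",
    "https://www.example.co.uk/clothing/shorts?page=9",
]
print(errors_by_section(not_found))  # -> [('/clothing/', 3), ('/blog/', 1)]
```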

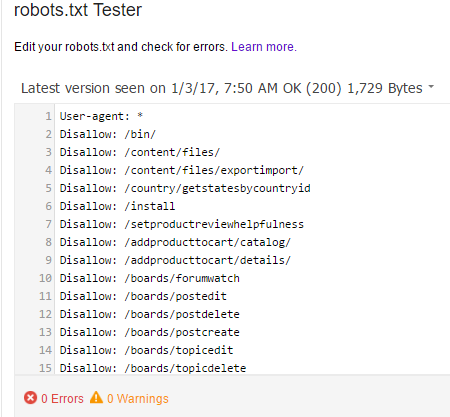

1.6 Robots.txt

The robots.txt tester in Search Console displays the robots.txt file for your domain as Google sees it. You can also test new rules here before adding them to the live file, and it will flag any errors and warnings. Once you have submitted your updated robots.txt file to Google, any issues Google finds will be flagged so you can take the appropriate action.


Why should you fix this?

You need to fix these issues, as they may mean that Google is having trouble following specific rules or that your robots.txt file is not configured properly. A single incorrect entry could result in Google leaving your whole website out of the SERPs.
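You can also sanity-check rules locally with Python’s standard `urllib.robotparser` module. This sketch compares an intended rule with a common mistake, `Disallow: /`, which blocks the whole site. Note that Python’s parser applies rules in file order and does not support Google’s `*` and `$` wildcards, so treat it as a rough check and rely on Search Console’s tester for the final word; the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def build_parser(rules: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser

intended = """
User-agent: *
Disallow: /checkout/
"""

mistake = """
User-agent: *
Disallow: /
"""

page = "https://www.example.co.uk/clothing/"  # hypothetical URL
for name, rules in (("intended", intended), ("mistake", mistake)):
    allowed = build_parser(rules).can_fetch("Googlebot", page)
    print(name, "allows" if allowed else "blocks", page)
```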

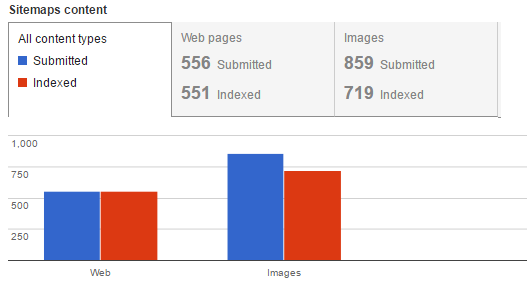

1.7 Sitemaps

You should submit all the XML sitemaps for your domain to your Search Console account. The sitemap section of your account will let you track how many pages you’ve submitted in your sitemap against how many pages Google is indexing. These two numbers should be as close as possible. If there are large discrepancies, you need to audit your XML Sitemap to ensure the pages in your sitemap are up to date and indexable.

Why should you fix this?

Fixing this will ensure Google is indexing the right pages on your website. For example, if your sitemap is up to date but only 20% of its pages are indexed, this comparison helps you identify the issue early and act on it quickly. If you do find issues, possible causes to investigate include pages that are (or could be) noindexed, parameter URLs and disallowed URLs.
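If you can get a list of the URLs Google has indexed, comparing it against your XML sitemap shows exactly which submitted pages are missing. A minimal sketch, assuming a standard sitemap using the sitemaps.org namespace and a hypothetical `indexed` set obtained however suits you:

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def sitemap_urls(xml_text: str) -> set[str]:
    """Extract every <loc> URL from a sitemap <urlset>."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)}

def not_indexed(xml_text: str, indexed: set[str]) -> list[str]:
    """Submitted URLs that do not appear in the indexed set."""
    return sorted(sitemap_urls(xml_text) - indexed)

sitemap = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.co.uk/</loc></url>
  <url><loc>https://www.example.co.uk/clothing/</loc></url>
  <url><loc>https://www.example.co.uk/clothing/jeans/</loc></url>
</urlset>"""
indexed = {"https://www.example.co.uk/", "https://www.example.co.uk/clothing/"}  # hypothetical
print(not_indexed(sitemap, indexed))  # -> ['https://www.example.co.uk/clothing/jeans/']
```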

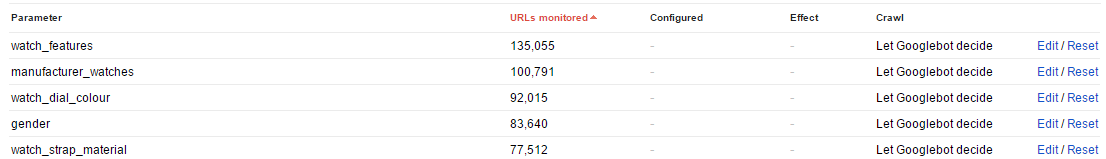

1.8 URL Parameters

Parameter URLs can be identified by a question mark. For example, if you have a web store with a clothing category such as www.example.co.uk/clothing, you might have filters on the page to refine by T-shirts, shorts and jeans. If a user filters by jeans, the URL might read www.example.co.uk/clothing?type=jeans. The parameter in this URL is ?type=jeans.

Why should you fix this?

Work your way through the parameters Search Console has found. For each one, you can tell Google to ignore those URLs or let Google decide whether to crawl them. This helps your crawl budget and lets Google focus on crawling the pages it needs to. This is an advanced feature, so only configure the parameters and how Google should crawl them if you’re comfortable doing so. As well as configuring them in Search Console, you can disallow parameters that do not need to be crawled in your robots.txt file.
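Before configuring anything, it helps to know which parameters your site actually generates. This sketch assumes a hypothetical list of crawled URLs (for example, from a crawler export); it counts how often each parameter appears and shows how many distinct pages remain once parameters are stripped.

```python
from collections import Counter
from urllib.parse import parse_qsl, urlsplit, urlunsplit

def parameter_counts(urls: list[str]) -> list[tuple[str, int]]:
    """Count how many crawled URLs use each query parameter name."""
    counts = Counter()
    for url in urls:
        for name, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True):
            counts[name] += 1
    return counts.most_common()

def strip_parameters(url: str) -> str:
    """Drop the query string and fragment, leaving the underlying page URL."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))

crawled = [  # hypothetical crawl export
    "https://www.example.co.uk/clothing?type=jeans",
    "https://www.example.co.uk/clothing?type=shorts&sort=price",
    "https://www.example.co.uk/clothing?sort=price",
    "https://www.example.co.uk/clothing",
]
print(parameter_counts(crawled))                     # -> [('type', 2), ('sort', 2)]
print(len({strip_parameters(u) for u in crawled}))   # -> 1 underlying page
```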

1.9 Pagespeed

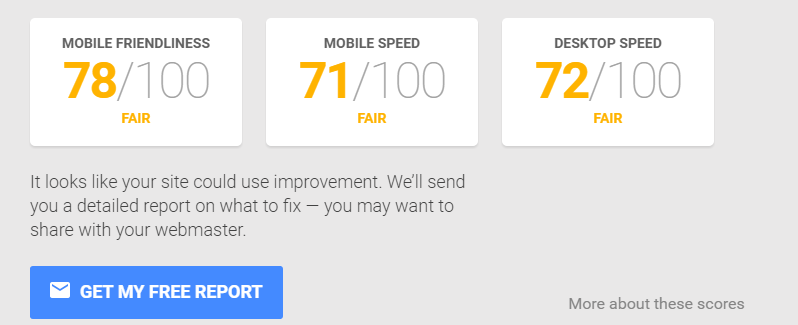

Pagespeed is becoming an increasingly important part of your website, not only for rankings but also for usability: people today expect web pages to load instantly and be user friendly. Google has found that many visitors leave a site if it hasn’t loaded within 3 seconds, and its introduction of AMP pages to the index reflects this focus on speed.

Why should you fix this?

According to Google, nearly half of all visitors leave a site if it hasn’t loaded within 3 seconds, so use the PageSpeed Insights and Test My Site tools to get scores for your website. Scores are given per URL rather than for the website as a whole, and each comes with a free report showing what needs fixing. Slow-loading pages may have a high bounce rate, so use Analytics to find the pages with the highest bounce rates, then test them in PageSpeed Insights and see how they compare with low-bounce pages. Generally, you should aim for a score of at least 85/100 to qualify as a good load speed, so the example above could be improved by optimising images and improving JavaScript.
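The bounce rate and speed comparison described above can be turned into a simple priority list. In this minimal sketch, the bounce rates (from Analytics) and PageSpeed scores are hypothetical values you would enter by hand or from your own exports, and 85 is the target score suggested above.

```python
def pages_to_fix(pages: dict[str, tuple[float, int]], target: int = 85) -> list[str]:
    """Return pages scoring below `target`, highest bounce rate first.
    `pages` maps URL -> (bounce rate as a fraction, PageSpeed score out of 100)."""
    slow = [(bounce, url) for url, (bounce, score) in pages.items() if score < target]
    return [url for bounce, url in sorted(slow, reverse=True)]

pages = {  # hypothetical figures
    "/": (0.35, 91),
    "/clothing/": (0.62, 58),
    "/clothing/jeans/": (0.48, 72),
    "/about/": (0.70, 88),  # high bounce but fast: probably not a speed problem
}
print(pages_to_fix(pages))  # -> ['/clothing/', '/clothing/jeans/']
```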

2. Crawling Your Site

Once you’ve exhausted the data you can gather from Search Console, it’s time to crawl your site with one of the many tools available to find further issues. For smaller sites of under 500 pages, you can use the free version of Screaming Frog, which is without a doubt the go-to tool for most marketers looking for technical issues on their website. For websites over 500 pages, you can buy a licence for £149 a year, which lets you crawl unlimited URLs and gives you access to advanced tools. Our personal choice of tool at Anicca, alongside Screaming Frog, is DeepCrawl, which gives you a comprehensive list of issues with the website grouped into easily digested topics and lets you work through each issue one by one. There are a host of other tools available, which we list at the end of the guide. Below is a list of common technical issues you may come across when crawling your website, and why you need to fix them.

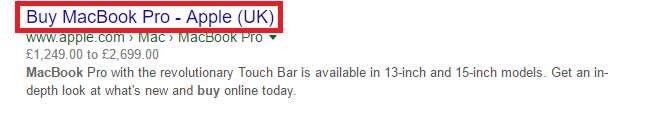

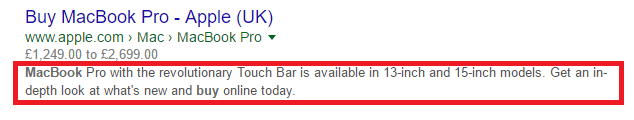

2.1 Page titles

Title tags act as a big call to action in the SERPs and can also influence how you rank. To make sure your page titles follow best practice, ensure each one is unique to its page and related to the content on that page. The ‘safe zone’ for page titles is 55-59 characters.

Why should you fix this?

If any page titles are flagged as too long, fix them so they aren’t truncated in the SERPs. For most websites, this just means logging in to your CMS and editing the titles manually. If you’re using WordPress, a plugin such as Yoast will tell you when you’ve exceeded the maximum character length. Whilst not as influential as they once were, page titles are still a very important part of your SEO and one of the basic things you should get right.
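A crawler will flag these for you, but the checks themselves are simple. This sketch assumes a hypothetical mapping of URLs to titles and flags missing, duplicate and over-long titles. It counts characters as a rough proxy, since Google actually truncates titles by pixel width.

```python
from collections import Counter

def title_issues(titles: dict[str, str], max_length: int = 59) -> dict[str, list[str]]:
    """Flag missing, over-long and duplicate titles. `titles` maps URL -> title."""
    counts = Counter(t.strip().lower() for t in titles.values() if t.strip())
    issues = {}
    for url, title in titles.items():
        clean = title.strip()
        problems = []
        if not clean:
            problems.append("missing")
        else:
            if len(clean) > max_length:
                problems.append(f"too long ({len(clean)} characters)")
            if counts[clean.lower()] > 1:
                problems.append("duplicate")
        if problems:
            issues[url] = problems
    return issues

titles = {  # hypothetical crawl data
    "/": "Example Clothing | Men's and Women's Fashion Online",
    "/clothing/": "Clothing for Men and Women | Jeans, Shorts, T-Shirts and More | Example",
    "/clothing/jeans/": "Jeans",
    "/clothing/shorts/": "Jeans",
    "/blog/": "",
}
for url, problems in title_issues(titles).items():
    print(url, problems)
```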

2.2 Meta Descriptions

Your meta description is also important, as it is the snippet of text that shows up in the SERPs under your page title.

Why should you fix this?

You need to ensure these are optimised to maximise the click-through rate of your listings. Keep them under 155 characters, and make sure they describe the page and include a call to action.
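The same approach works for meta descriptions. A minimal sketch, assuming a hypothetical mapping of URLs to descriptions and the 155-character limit suggested above:

```python
def description_issues(descriptions: dict[str, str], max_length: int = 155) -> dict[str, str]:
    """Flag missing or over-long meta descriptions. Maps URL -> description."""
    issues = {}
    for url, text in descriptions.items():
        text = text.strip()
        if not text:
            issues[url] = "missing"
        elif len(text) > max_length:
            issues[url] = f"too long ({len(text)} characters)"
    return issues

descriptions = {  # hypothetical crawl data
    "/clothing/jeans/": "Shop our range of men's and women's jeans. Free UK delivery "
                        "on orders over £50. Browse the collection today!",
    "/clothing/shorts/": "",
    "/blog/": ("Our blog covers style guides, seasonal trends and care tips "
               "for every item in our range. " * 2),
}
print(description_issues(descriptions))
```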

2.3 Canonical Tags

Canonical tags might be something you’ve not heard of before. They are tags placed in the head section of your HTML that help bots such as Googlebot identify the original, preferred version of a page’s content. They are especially useful for e-commerce sites, where you might have a separate page for each size of a product.

Why should you fix this?

If you have the exact same product available in three different sizes, each with its own product URL, your site will struggle to rank for that product because the pages are competing with each other. Choose one version to be the one you want to rank, and add a canonical tag to the other versions pointing to it. For example, if you have a specific t-shirt available in small, medium and large, you could put a canonical tag on all three pages pointing to the most popular size, such as large.

Therefore, the large t-shirt is what would appear in the SERPs. This will help your product rank well, as well as improving crawl and index efficiency.
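A canonical tag is a `<link rel="canonical" href="URL">` element in the page’s `<head>`. As a sketch, assuming hypothetical URLs for the three t-shirt sizes, the Python below uses the standard `html.parser` module to confirm that each size page declares a single canonical pointing at the large version.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> on a page."""
    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel:
            self.canonicals.append(attributes.get("href"))

def canonical_of(html: str) -> list:
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    return finder.canonicals

large = "https://www.example.co.uk/t-shirt-large"  # hypothetical URLs
pages = {
    "https://www.example.co.uk/t-shirt-small": f'<head><link rel="canonical" href="{large}"></head>',
    "https://www.example.co.uk/t-shirt-medium": f'<head><link rel="canonical" href="{large}"></head>',
    large: f'<head><link rel="canonical" href="{large}"></head>',  # self-referencing
}
for url, html in pages.items():
    found = canonical_of(html)
    print(url, "ok" if found == [large] else f"check: {found}")
```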

2.4 Pagination Tags

Pagination tags (rel="next" and rel="prev") are useful for a series of related pages on your website. Although similar, they work slightly differently from canonical tags: rather than pointing to one preferred page, they link a series of content together. A common use is on category pages for e-commerce stores, where a category has multiple pages of products, for example www.example.com/shorts, www.example.com/shorts?page=2 and www.example.com/shorts?page=3. Adding pagination tags to these pages shows Google that they are linked parts of a series rather than duplicate pages.

Why should you fix this?

Similar pages that are not defined by pagination tags may be treated by Google as duplicates. By adding pagination tags, you’re telling Google that these pages are part of the same group. This will improve your crawl and index efficiency and ensure Google doesn’t wrongly flag duplicate pages on the website.
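The tags themselves are `<link rel="prev">` and `<link rel="next">` elements in each page’s `<head>`. This sketch generates them for the shorts example above, assuming (as in that example) that the first page has no `?page=` parameter; the function name is hypothetical.

```python
def pagination_links(base_url: str, page: int, last_page: int) -> list[str]:
    """Build rel="prev"/rel="next" tags for page `page` of a paginated series,
    where page 1 is the bare base URL and later pages use ?page=N."""
    links = []
    if page > 1:
        previous = base_url if page == 2 else f"{base_url}?page={page - 1}"
        links.append(f'<link rel="prev" href="{previous}">')
    if page < last_page:
        links.append(f'<link rel="next" href="{base_url}?page={page + 1}">')
    return links

for page in range(1, 4):
    print(page, pagination_links("https://www.example.com/shorts", page, 3))
```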

2.5 Duplicate Pages

Duplicate pages can be caused by multiple versions of your site being accessible, such as with and without www or over both http and https, or simply by two pages with very similar content. This creates a problem because Google will not know which page to rank, and you’re also diluting the page’s authority by having two pages on one topic.

Why should you fix this?

You need to make sure there are no duplication issues on your website. Duplicate pages essentially compete with each other to rank on Google and split the authority between them. If you fix the duplication, a single page receives the consolidated authority and has a better chance of performing well. You can use canonical tags and 301 redirects to resolve duplication issues and tell Google which pages are the primary pages.
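To find internal pages with very similar content, you can compare their text. This minimal sketch uses a standard technique, Jaccard similarity of three-word shingles; it is not how Google measures duplication, and the example texts and any threshold you choose are assumptions.

```python
import re

def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping `size`-word sequences in a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(a: str, b: str) -> float:
    """Jaccard similarity of two texts' shingles (0 = nothing shared, 1 = identical)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)

# Hypothetical page copy
page_a = "Our slim fit jeans are made from stretch denim and come in three washes."
page_b = "Our slim fit jeans are made from stretch denim and come in four washes."
page_c = "Read our guide to caring for leather shoes through the winter."
print(round(similarity(page_a, page_b), 2))  # -> 0.71, likely near-duplicates
print(similarity(page_a, page_c))            # -> 0.0
```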

2.6 Images and Rich Media

Images and rich media make up a large portion of most websites. It’s important to make sure your images are optimised for the web and contain all the right information, such as ALT tags.

Why should you fix this?

Images that are not optimised for the web are one of the most common causes of slow-loading websites. Optimising images so they have smaller file sizes and are uploaded at the size they are displayed at will help your site load faster. Adding ALT tags also helps Google understand what each image shows, giving more context and relevance to a page.
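Missing ALT tags are easy to find programmatically. This sketch uses Python’s standard `html.parser` to list images with no ALT text; the class name and markup are hypothetical. Bear in mind that an empty `alt=""` is legitimate for purely decorative images, so review the results rather than fixing them blindly.

```python
from html.parser import HTMLParser

class ImageAuditor(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""
    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        if not (attributes.get("alt") or "").strip():
            self.missing_alt.append(attributes.get("src"))

auditor = ImageAuditor()
auditor.feed('<img src="/img/jeans.jpg" alt="Blue slim fit jeans">'
             '<img src="/img/banner.jpg">'
             '<img src="/img/shorts.jpg" alt="">')
auditor.close()
print(auditor.missing_alt)  # -> ['/img/banner.jpg', '/img/shorts.jpg']
```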

2.7 302 Redirects

302 redirects are temporary redirects. They point to a temporary alternative page and pass on little to no authority, so they suit situations such as when your site is down or developers are working on part of the website. They should not be used for permanent moves; the main exception is where they are unavoidable, such as redirects generated by social sharing plugins installed on your website.

Why should you fix this?

Generally, temporary redirects do not pass authority the way a permanent 301 redirect does. However, John Mueller, Webmaster Trends Analyst at Google, has said that in some cases 302 redirects are treated the same as 301 redirects. To be safe, if you’ve permanently deleted pages from your site, 301 redirect them so that any SEO authority they have is passed through to relevant pages rather than lost.
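If your crawler exports redirects as source, status and target, a short script can flag temporary 302s and redirect chains. A minimal sketch with a hypothetical export:

```python
def redirect_issues(redirects: dict[str, tuple[int, str]]) -> list[str]:
    """Flag 302s and chains. `redirects` maps source URL -> (status code, target URL)."""
    issues = []
    for source, (status, target) in redirects.items():
        if status == 302:
            issues.append(f"{source}: temporary 302 redirect to {target}")
        if target in redirects:
            issues.append(f"{source}: chain, {target} redirects again")
    return issues

redirects = {  # hypothetical crawl export
    "/old-jeans": (301, "/clothing/jeans/"),
    "/summer-sale": (302, "/sale/"),
    "/old-shorts": (301, "/shorts"),
    "/shorts": (301, "/clothing/shorts/"),
}
for issue in redirect_issues(redirects):
    print(issue)
```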

2.8 URL Structure

Crawling your site is a good time to analyse your URL structure. How is your website laid out structurally? Is the folder structure easy to read, and does it make sense? A user should be able to understand where they’re going by looking at your URL. Also, aim to keep URLs within an 80-character limit to avoid truncation in the SERPs.

Why should you fix this?

If your URL structure is completely flat, with all pages at the root, Google may see all of these pages as being of the same importance, and it becomes harder to see where on the site each page sits. For an e-commerce site, you should aim for a URL structure like this:

www.example.co.uk/category/sub-category/product

For lead generation sites, you should follow a similar layout to the e-commerce example based on your services.
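To spot flat or over-long URLs in a crawl export, you can count each URL’s path segments and characters. A minimal sketch using the 80-character limit suggested above; the function name and URLs are hypothetical.

```python
from urllib.parse import urlsplit

def url_report(url: str, max_length: int = 80) -> dict:
    """Report how many path segments a URL has and whether it is too long."""
    segments = [part for part in urlsplit(url).path.split("/") if part]
    return {
        "segments": len(segments),
        "length": len(url),
        "too_long": len(url) > max_length,
    }

print(url_report("https://www.example.co.uk/clothing/jeans/slim-fit-blue-jeans"))  # structured
print(url_report("https://www.example.co.uk/slim-fit-blue-jeans"))                 # flat
```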

3. Tools

There is a plethora of tools available to marketers and webmasters today. Unlike years ago, these tools are relatively cheap if you’re only using them to monitor your own website, and in some cases you can carry out in-depth technical assessments and audits of your website without spending a penny on tools. We’ve rounded up some of our favourite go-to tools that you can use to resolve your technical SEO issues.

3.1 Site Crawl Tools

DeepCrawl

DeepCrawl is our tool of choice and something we use day in, day out here at Anicca. It crawls your website to identify SEO issues, providing a huge amount of easily digested data laid out so that virtually anyone can crawl and analyse their website. DeepCrawl is a paid-for tool, with prices starting from £55 a month depending on your needs. It will find a whole host of issues with your site, from page titles that exceed the maximum width to non-301 redirects, orphan pages with organic traffic and much more.

Screaming Frog

Screaming Frog is an SEO spider tool that is free to use on smaller sites. It crawls a website and picks out the individual elements we covered above, such as page titles, descriptions, images, H1s and H2s, so you can easily see any that do not meet Google’s recommended criteria. The free version is limited to crawling 500 pages, but a licence for unlimited crawling costs only £149 a year. It’s probably the best tool you can get for the price and is the go-to tool for SEOs and agencies across the world.

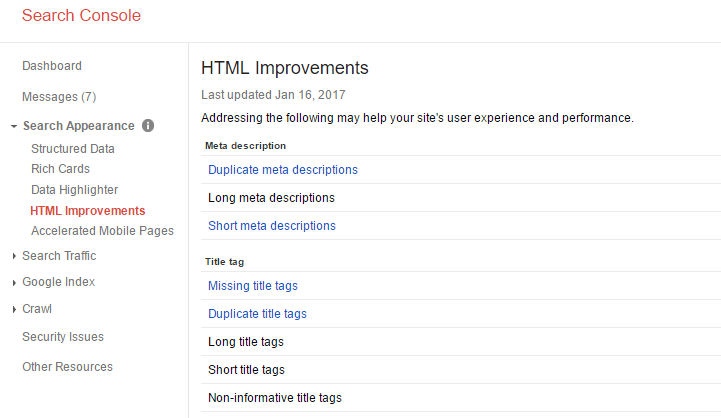

Top Tip

If you do not want to commit to a tool, your Search Console account will highlight some of the more basic HTML improvements, such as duplicate, short and long page titles and meta descriptions. Search Console keeps a historical record, so once you’ve fixed these issues you need to mark them as fixed.

3.2 Google Tools

There are plenty of Google tools to sink your teeth into, but here are the main ones you should look at.

Search Console – The health centre for your website.

Pagespeed Insights – Test how fast your site is and get recommendations on what can be fixed to improve the pagespeed of your website.

Test My Site – Find out how your website performs on both desktop and mobile devices.

Structured Data Testing Tool – The structured data testing tool allows you to check the markup on a page, or you can directly upload your code before adding it to your website to ensure it marks up correctly.

Tag Assistant – A Chrome plugin that displays any Google tags installed on the page, as well as any issues with tags that are not installed correctly.

3.3 External Duplication Tools

If you want to check for external duplicate content, take a look at Copyscape and DMCA, which compare your site’s content with other content on the web to see whether it has been copied anywhere or is very similar. You can then take the appropriate action.

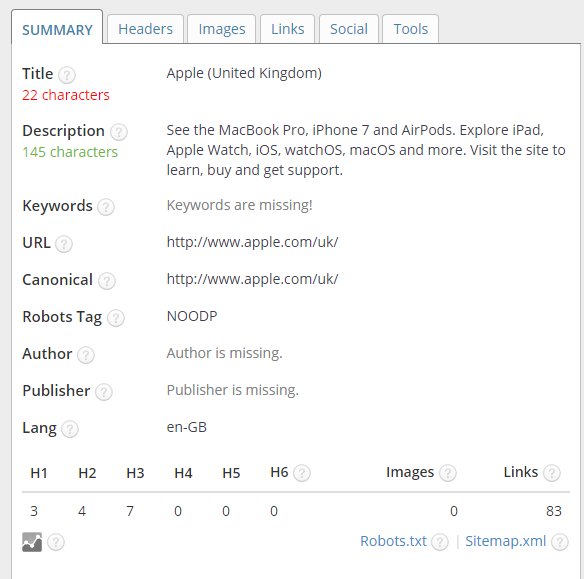

3.4 Other Tools

There is no shortage of technical SEO tools available, and plenty are free. To make your life that little easier we’ve put together some other nifty little tools you can use to easily find issues.

Siteliner – Siteliner is a tool to analyse internal duplication on your website. It is free for 250 URLs and gives you a basic indication and percentage of duplication.

Redirect-Checker – This tool shows you the status of a page: whether it’s 301 redirecting, 302 redirecting, returning a 404 (not found) or part of a redirect chain.

SEO META in ONE CLICK – A Chrome extension you can use to easily see the main elements of your pages and check they fall within Google’s guidelines.

Panguin Tool – The Panguin tool uses your Analytics data to show your traffic levels against the dates of Google algorithm updates. If you think you’ve been affected by Penguin or another algorithm update, this tool makes it easier to diagnose.

GA Checker – GA Checker is a tool you can use to crawl your website and find any pages that may be missing the analytics tracking code, perfect to ensure you aren’t losing out on any data!

Conclusion

Technical SEO is like building the foundations of a house: they might not be visible, but they are integral to the structure of your website. You will often find that by fixing some of the larger technical issues, you see an initial boost in traffic before you even begin implementing ongoing SEO activities.

These are some of the most common technical SEO issues you will come across when initially auditing your website, and we hope this guide has given you an insight into what to set up, check and fix. By starting with these issues, you’ll give your website the best chance of performing well in the SERPs, and you’ll be ready to start digging deeper into your website to find more advanced issues.

The technical SEO team at Anicca has years of experience dealing with issues like these, plus extensive knowledge of more technical problems. As you can see from this guide, a lot is involved in the audit process, and this is just the starter! If you do not have the capacity in your business to take on these tasks, or you’re launching a new website and want to make sure you don’t harm your current SEO, get in touch with us.